As AI becomes increasingly embedded in business operations, more founders are adopting agents—automated systems powered by large language models (LLMs) or task-specific components.

Improving Your Agent

However, launching an agent is just the beginning. Continuous improvement is key to ensuring it delivers real value.

Here’s a practical, non-technical guide to setting up a robust evaluation and improvement process for your AI agent.

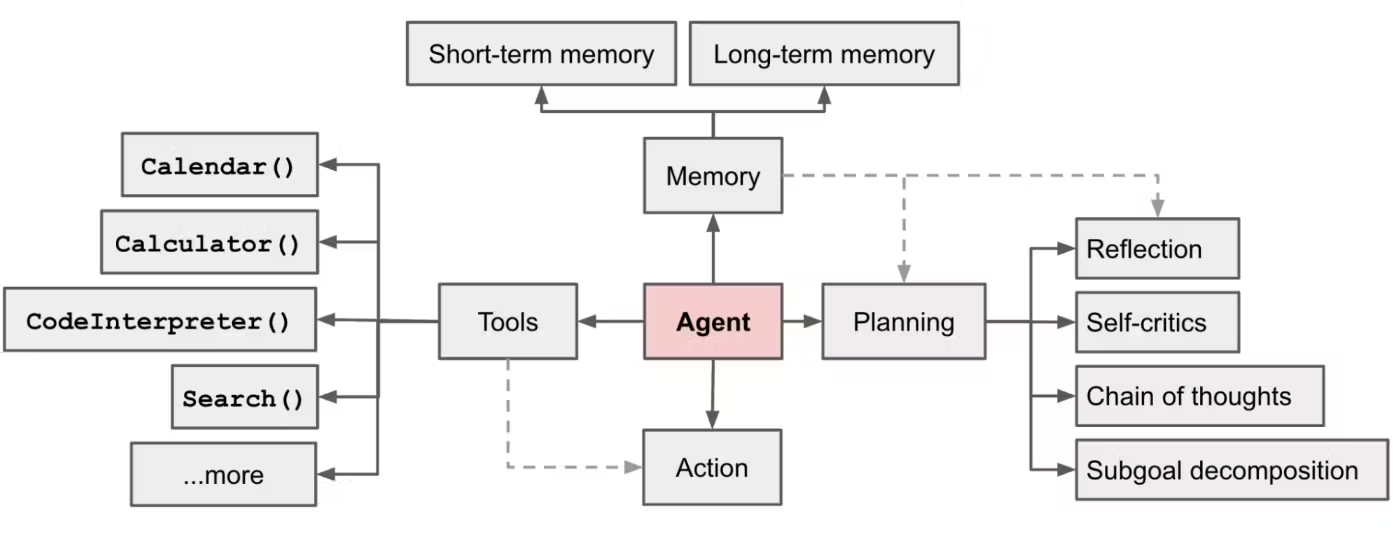

Fig. 1. Overview of a general LLM-powered autonomous agent system.

1. Set Up an Evaluation Strategy from the Start

An AI agent isn’t just one big block of code. It comprises multiple components (like prompt generators, classifiers, or search tools) that together create the final output. To improve your agent, you need to measure not only the end result but also the performance of each step within the process.

Your evaluation strategy should consist of:

- Final Output Evaluation: Does the response solve the user’s problem?

- Component-Level Evaluation: Are individual modules working as expected? (e.g., did the retrieval tool fetch the correct data?)

- Trajectory Evaluation: Was the sequence of actions efficient and logical?

The goal is to track these metrics over time to understand which areas need optimization, whether that means improving the end response, fine-tuning individual components, or adjusting the entire workflow. LangChain has a helpful guide on agent evaluation that walks through a concrete example.
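To make "track these metrics over time" concrete, here is a minimal Python sketch that stores one score per evaluation level for each test case and flags metrics that got worse between two agent versions. The `EvalRecord` schema, the 0 to 1 score scale, and the 0.02 tolerance are illustrative assumptions, not part of any particular framework.

```python
from dataclasses import dataclass, field
from statistics import mean

@dataclass
class EvalRecord:
    """Scores (0 to 1) for one test case, split by evaluation level. Hypothetical schema."""
    run_id: str           # agent version or date of the evaluation run
    case_id: str          # which test case was evaluated
    final_output: float   # did the response solve the user's problem?
    components: dict[str, float] = field(default_factory=dict)  # e.g. {"retrieval": 0.9}
    trajectory: float = 0.0  # was the sequence of actions efficient and logical?

def summarize(records: list[EvalRecord], run_id: str) -> dict[str, float]:
    """Average each level, and each component separately, for one run."""
    rows = [r for r in records if r.run_id == run_id]
    if not rows:
        raise ValueError(f"no records for run {run_id!r}")
    summary = {
        "final_output": mean(r.final_output for r in rows),
        "trajectory": mean(r.trajectory for r in rows),
    }
    for name in sorted({n for r in rows for n in r.components}):
        summary[f"component:{name}"] = mean(
            r.components[name] for r in rows if name in r.components
        )
    return summary

def regressions(before: dict[str, float], after: dict[str, float],
                tolerance: float = 0.02) -> list[str]:
    """Metrics that dropped by more than `tolerance` between two runs."""
    return [k for k in before if k in after and after[k] < before[k] - tolerance]

records = [
    EvalRecord("v1", "c1", 0.8, {"retrieval": 0.9}, 0.7),
    EvalRecord("v1", "c2", 0.6, {"retrieval": 0.7}, 0.5),
    EvalRecord("v2", "c1", 0.9, {"retrieval": 0.6}, 0.8),
    EvalRecord("v2", "c2", 0.7, {"retrieval": 0.6}, 0.6),
]
print(regressions(summarize(records, "v1"), summarize(records, "v2")))
# → ['component:retrieval']
```

In this made-up example, the final output and trajectory both improved in v2 while retrieval quietly got worse, which is exactly the kind of change a single end-to-end score would hide.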

2. Evaluate the Final Agent Output

The final output is what your users interact with, so this should be your first focus.

- Use success criteria: Define KPIs aligned with business needs, such as response relevance, accuracy, utility, or customer satisfaction (e.g., Net Promoter Score).

- Collect feedback: Get explicit feedback from end-users or testers.

- Set up automated scoring: Use a judge prompt (an LLM instructed to act as a grader) to score the final answer on specific dimensions such as relevance, accuracy, and tone.
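Here is a minimal Python sketch of a judge prompt. `call_llm` is a hypothetical stand-in for whatever model client you use (any function that takes a prompt string and returns text), and the 1 to 5 scale and the three dimensions are example choices, not a standard.

```python
import json
from typing import Callable

DIMENSIONS = ("relevance", "accuracy", "tone")  # example dimensions; match them to your KPIs

JUDGE_TEMPLATE = """You are grading an AI assistant's answer to a user.
Question: {question}
Answer: {answer}

Score the answer from 1 (poor) to 5 (excellent) on each of: {dims}.
Reply with JSON only, for example {{"relevance": 4, "accuracy": 5, "tone": 3}}."""

def judge(question: str, answer: str, call_llm: Callable[[str], str]) -> dict[str, int]:
    """Grade `answer` with a judge prompt; `call_llm` is any prompt-in, text-out function."""
    prompt = JUDGE_TEMPLATE.format(question=question, answer=answer, dims=", ".join(DIMENSIONS))
    verdict = json.loads(call_llm(prompt))  # invalid JSON raises a ValueError subclass
    scores = {}
    for dim in DIMENSIONS:
        value = int(verdict[dim])  # a missing dimension raises KeyError
        if not 1 <= value <= 5:
            raise ValueError(f"{dim} score out of range: {value}")
        scores[dim] = value
    return scores

# Stub judge for demonstration; swap in a real model call
fake_llm = lambda prompt: '{"relevance": 5, "accuracy": 4, "tone": 4}'
print(judge("How do I reset my password?", "Use 'Forgot password' on the login page.", fake_llm))
# → {'relevance': 5, 'accuracy': 4, 'tone': 4}
```

Asking for JSON and validating it means a malformed or out-of-range verdict fails loudly instead of silently skewing your scores.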

Pro Tip: Establish a ground truth dataset to compare the agent’s responses against ideal outcomes.
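A ground truth dataset can be as simple as a list of questions paired with ideal answers. The sketch below scores responses with token-overlap F1, a deliberately crude stand-in for semantic comparison; for free-form answers you would likely pair it with a judge prompt. The dataset, the stand-in `agent` function, and the 0.8 pass threshold are illustrative assumptions.

```python
import re
from collections import Counter
from typing import Callable

def _tokens(text: str) -> list[str]:
    """Lowercase and keep only alphanumeric runs, so punctuation doesn't matter."""
    return re.findall(r"[a-z0-9]+", text.lower())

def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a response and the ideal answer (1.0 = same tokens)."""
    pred, ref = _tokens(prediction), _tokens(reference)
    if not pred or not ref:
        return float(pred == ref)  # both empty counts as a match
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

def score_against_ground_truth(dataset: list[tuple[str, str]],
                               agent: Callable[[str], str],
                               threshold: float = 0.8):
    """Run the agent on each (question, ideal answer) pair; return pass rate and failures."""
    failures = []
    for question, ideal in dataset:
        response = agent(question)
        f1 = token_f1(response, ideal)
        if f1 < threshold:
            failures.append((question, response, round(f1, 2)))
    return 1 - len(failures) / len(dataset), failures

dataset = [
    ("What is your refund window?", "30 days from purchase"),
    ("Do you ship to Canada?", "Yes, we ship to Canada"),
]
agent = lambda q: "30 days from purchase" if "refund" in q else "No"  # stand-in agent
print(score_against_ground_truth(dataset, agent))
# → (0.5, [('Do you ship to Canada?', 'No', 0.0)])
```

Keeping the failing cases, not just the pass rate, gives reviewers a ready-made list of responses to inspect.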

3. Evaluate Each Step of the Agent’s Workflow

An AI agent’s output is only as good as its components and decision-making steps. A poor response doesn’t necessarily mean the whole agent is flawed; it may point to a single suboptimal intermediate step.

- Evaluate modular components: Look at the outputs from individual steps, such as data retrieval, intent detection, or decision-making logic.

- Debug trajectories: Trace how the agent arrived at its conclusion. If the reasoning path (or trajectory) is flawed, adjust the sequence of operations to make the agent more effective.
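As an illustration of both checks, the sketch below scores a retrieval step with recall@k (a component-level metric) and compares the agent's tool calls against a reference trajectory. The tool names and document IDs are hypothetical; in practice they would come from your agent's logs or traces.

```python
def recall_at_k(retrieved_ids: list[str], relevant_ids: set[str], k: int = 5) -> float:
    """Component check: fraction of relevant documents found in the top-k results."""
    if not relevant_ids:
        return 1.0  # nothing to find, so nothing was missed
    hits = len(set(retrieved_ids[:k]) & relevant_ids)
    return hits / len(relevant_ids)

def trajectory_report(actual: list[str], expected: list[str]) -> dict:
    """Trajectory check: compare the agent's tool calls with a reference sequence."""
    remaining = iter(actual)
    # `step in remaining` consumes the iterator, so this tests that the expected
    # steps appear in `actual` in the same relative order (a subsequence check)
    in_order = all(step in remaining for step in expected)
    return {
        "exact_match": actual == expected,
        "in_order": in_order,
        "missing_steps": [s for s in expected if s not in actual],
        "extra_calls": max(0, len(actual) - len(expected)),
    }

print(recall_at_k(["doc3", "doc7", "doc1"], {"doc1", "doc9"}, k=3))  # → 0.5
print(trajectory_report(
    actual=["classify_intent", "search_kb", "search_kb", "draft_reply"],
    expected=["classify_intent", "search_kb", "draft_reply"],
))
# → {'exact_match': False, 'in_order': True, 'missing_steps': [], 'extra_calls': 1}
```

An in-order match with extra calls, as in this example, usually points to a redundant step (here, a repeated search) rather than a wrong one.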

4. Human Oversight for Scalability and Reliability

Human oversight is essential for ensuring the quality of agent outputs, especially in high-stakes applications like healthcare or legal advice. It also plays a critical role in continuous learning and trust-building. There are two primary ways to bring humans into the loop:

Auditing Agent Outputs:

- You can create a system where human reviewers randomly audit the agent’s outputs, rating them for correctness and usefulness.

- You could also build an Auditor Agent that runs checks on and validates your agent’s logs. This can be a simple tool with access to Google Search and the customer’s knowledge bases that checks for incorrect suggestions and hallucinations.

- Scalable review systems can rely on SMEs (subject matter experts) for domain-specific evaluations. The data gathered from these audits can pinpoint weak areas that need further improvement.
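A minimal Python sketch of random auditing: sample a fixed share of logged outputs for reviewers, then average their 1 to 5 ratings per topic to surface weak areas. The 5% sampling rate, the topic labels, and the 3.5 cut-off are assumptions to adjust for your volume and risk level.

```python
import random
from collections import defaultdict
from statistics import mean
from typing import Optional

def sample_for_audit(log_ids: list[str], rate: float = 0.05,
                     seed: Optional[int] = None) -> list[str]:
    """Pick a random subset of logged agent outputs for human review."""
    if not log_ids:
        return []
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    k = max(1, round(len(log_ids) * rate))  # always review at least one output
    return rng.sample(log_ids, k)

def weak_areas(ratings: list[tuple[str, int]], threshold: float = 3.5) -> dict[str, float]:
    """Average reviewer ratings per topic and return topics below `threshold`, worst first."""
    by_topic = defaultdict(list)
    for topic, rating in ratings:
        by_topic[topic].append(rating)
    averages = {topic: mean(scores) for topic, scores in by_topic.items()}
    below = [(t, a) for t, a in averages.items() if a < threshold]
    return dict(sorted(below, key=lambda item: item[1]))

batch = sample_for_audit([f"log-{i}" for i in range(200)], rate=0.05, seed=7)
print(len(batch))  # → 10
ratings = [("billing", 2), ("billing", 3), ("shipping", 5), ("shipping", 4), ("returns", 3)]
print(list(weak_areas(ratings)))  # → ['billing', 'returns']
```

Random sampling keeps the audit unbiased; once weak topics emerge, you can oversample them on purpose.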

Human-In-the-Loop (HITL) Approval System:

- In scenarios requiring high precision, agents can assist human operators. The agent makes recommendations, which the human approves or rejects.

- This approach creates a feedback loop—human corrections become valuable training data that improves the agent over time.
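The approval loop can be sketched in a few lines of Python. `recommend` stands in for your agent and `review` for whatever interface your operators use (a review UI, a chat button, a ticket queue); both, and the `Decision` type, are hypothetical. The point carried over from the text is that every rejection with a correction is stored as a training example.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Decision:
    approved: bool
    correction: Optional[str] = None  # the human's answer when the proposal is rejected

def hitl_step(task: str,
              recommend: Callable[[str], str],
              review: Callable[[str, str], Decision],
              training_log: list[dict]) -> str:
    """Agent proposes, human approves or rejects; corrections become training data."""
    proposal = recommend(task)
    decision = review(task, proposal)
    if decision.approved:
        return proposal
    if decision.correction is None:
        raise ValueError("a rejected proposal needs a correction from the reviewer")
    # Store (task, rejected, preferred) so it can later feed few-shot examples or fine-tuning
    training_log.append({"task": task, "rejected": proposal, "preferred": decision.correction})
    return decision.correction

log: list[dict] = []
final = hitl_step(
    "Refund request for a $50 order",
    recommend=lambda task: "Approve a $500 refund",
    review=lambda task, proposal: Decision(approved=False, correction="Approve a $50 refund"),
    training_log=log,
)
print(final, len(log))  # → Approve a $50 refund 1
```

Keeping both the rejected and the preferred answer preserves the contrast, which is useful if you later train on preference pairs rather than only on correct answers.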

Example: Foundry’s internal Auditor Agent identified a potential issue with the customer agent, using the customer’s knowledge base to spot an incorrect claim.

When you hover over the highlighted span, you can see that the auditor agent has flagged a subtle issue that would be hard for a human to catch alone. This helps human reviewers find more mistakes than they would on their own, and they can accept or reject the auditor agent’s flags to keep precision high.

5. How to Use Evaluation Data for Continuous Improvement

The data you gather from audits, HITL workflows, and component evaluations becomes the foundation for continuous improvement. Here’s how you can leverage it:

- Prompt Optimization: Use the feedback and audit results to rewrite or auto-optimize prompts that guide the agent’s behavior. For automatic prompt optimization, use an open-source tool like AdalFlow.

- Few-Shot Learning: Incorporate the best examples from your data as few-shot examples to enhance your agent’s performance for specific tasks.

- Component Tuning: If an individual step (like intent recognition) performs poorly, you can modify, replace, or retrain that module.

- LLM Fine-Tuning: When necessary, fine-tune the underlying LLM on domain-specific data to improve accuracy and relevance for your use case. Repeating this periodically helps keep your agent up to date.

- Planning: If your agent has poor trajectories, meaning it doesn’t take the right actions in the right order, consider adding a planning step. Several research papers report that having the LLM draft a high-level plan before acting can substantially improve performance and produce better trajectories. You can use your evaluation data to check whether the trajectories have actually improved.
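As one way to turn reviewed data into improvements, the Python sketch below picks the highest-rated past interactions as few-shot examples and exports well-rated interactions as JSONL for fine-tuning. The record fields (`task`, `input`, `output`, `rating`) are a hypothetical schema, and the `messages` layout follows the chat format that several fine-tuning APIs (for example OpenAI's) accept; check your provider's documentation for its exact requirements.

```python
import json

def build_few_shot_prompt(examples: list[dict], task: str, query: str,
                          k: int = 3, min_rating: int = 4) -> str:
    """Prefix the query with the k best-rated past examples for the same task."""
    good = [e for e in examples if e["task"] == task and e["rating"] >= min_rating]
    good.sort(key=lambda e: e["rating"], reverse=True)  # best examples first
    parts = [f"Input: {e['input']}\nOutput: {e['output']}" for e in good[:k]]
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

def to_finetune_jsonl(examples: list[dict], system_prompt: str, min_rating: int = 4) -> str:
    """Serialize well-rated interactions as one chat-format JSON object per line."""
    lines = []
    for e in examples:
        if e["rating"] < min_rating:
            continue  # keep only outputs reviewers rated highly
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": e["input"]},
            {"role": "assistant", "content": e["output"]},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines)

examples = [
    {"task": "intent", "input": "Where is my order?", "output": "order_status", "rating": 5},
    {"task": "intent", "input": "Cancel my plan", "output": "cancellation", "rating": 4},
    {"task": "intent", "input": "Hi there", "output": "billing", "rating": 1},
    {"task": "summary", "input": "Summarize ticket 42", "output": "Customer wants a refund.", "rating": 5},
]
print(build_few_shot_prompt(examples, "intent", "I want a refund", k=2))
print(to_finetune_jsonl(examples, "You are a support agent."))
```

The same rating filter drives both uses, so improving your review data improves your prompts and your fine-tuning set at once.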

6. Aligning with Business Goals and Scaling Operations

Improving an agent isn’t just about technical refinement—it’s also about ensuring the system stays aligned with your business goals. You can track progress over time using metrics that directly relate to your business, such as customer satisfaction, task completion rates, or time saved.
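Business metrics are usually a simple roll-up of interaction logs. The sketch below computes a weekly task completion rate and minutes saved; the log fields and the per-task `baseline_minutes` (how long the task took a person before the agent) are assumptions you would replace with your own definitions.

```python
from collections import defaultdict
from datetime import date

def weekly_kpis(interactions: list[dict]) -> dict[str, dict[str, float]]:
    """Roll up task completion rate and minutes saved per ISO week."""
    weeks = defaultdict(list)
    for row in interactions:
        year, week, _ = row["day"].isocalendar()
        weeks[f"{year}-W{week:02d}"].append(row)
    report = {}
    for label, rows in sorted(weeks.items()):
        completed = [r for r in rows if r["completed"]]
        report[label] = {
            "completion_rate": len(completed) / len(rows),
            # time saved only counts tasks the agent actually completed
            "minutes_saved": sum(r["baseline_minutes"] - r["agent_minutes"] for r in completed),
        }
    return report

log = [
    {"day": date(2024, 1, 1), "completed": True, "baseline_minutes": 15, "agent_minutes": 3},
    {"day": date(2024, 1, 3), "completed": False, "baseline_minutes": 15, "agent_minutes": 5},
    {"day": date(2024, 1, 8), "completed": True, "baseline_minutes": 10, "agent_minutes": 2},
]
print(weekly_kpis(log))
# → {'2024-W01': {'completion_rate': 0.5, 'minutes_saved': 12}, '2024-W02': {'completion_rate': 1.0, 'minutes_saved': 8}}
```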

Once you’ve fine-tuned the agent and have reliable workflows, you can scale the system by:

- Onboarding more reviewers or SMEs to audit outputs at scale.

- Automating portions of the review process (e.g., auto-flagging anomalies).

- Deploying multiple agents across different functions or departments, leveraging the same optimization techniques to replicate success.
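For auto-flagging anomalies, a simple starting point is a z-score rule over per-output scores (for example, judge scores): anything far below the batch average is routed to a reviewer. This is one basic technique among many; with small or skewed batches, a median-based rule may be more robust. The log IDs and scores below are made up.

```python
from statistics import mean, stdev

def flag_anomalies(scores: dict[str, float], z_threshold: float = 2.0) -> list[str]:
    """Return IDs whose score is more than `z_threshold` standard deviations below the mean."""
    values = list(scores.values())
    if len(values) < 2:
        return []  # stdev needs at least two data points
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all scores identical, nothing stands out
    return [log_id for log_id, s in scores.items() if (mu - s) / sigma > z_threshold]

scores = {f"log-{i}": 4.0 + (i % 2) * 0.5 for i in range(20)}
scores["log-99"] = 1.0  # one unusually poor response
print(flag_anomalies(scores))  # → ['log-99']
```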

Final Thoughts

Improving your AI agent is an ongoing process, but it becomes manageable—and even scalable—when you break it down into clear steps:

- Set up an evaluation framework from the start.

- Evaluate the final agent response, components, and trajectory separately.

- Integrate human oversight, either through audits or human-in-the-loop workflows.

- Use the evaluation data to fine-tune components, prompts, and the underlying LLM when necessary.

With the right systems in place, you can ensure your agent evolves to meet both operational needs and user expectations. Leveraging tools like AdalFlow and prompt optimization techniques, along with human expertise, allows you to create a sustainable feedback loop that drives continuous improvement.

By following this structured approach, your agent won’t just work—it will keep getting smarter, more efficient, and better aligned with your business goals.

Don’t worry if the process feels a bit technical at times—what’s important is establishing the right evaluation and feedback systems from day one. Over time, these systems will help your agent evolve in ways you never thought possible.

If you want to talk to us about setting this up for your business, feel free to reach out at contact@neonrev.com.

Good luck, and let the iteration begin! 🚀